Regressing to the mean in the pursuit of truth

"IndexError: index 12349 is out of bounds for axis 0 with size 4857." What does that even mean? Based on the numbers, some vector is being smashed together with a vector of a different size. It was 11:45pm on July 22nd and I was 15 minutes away from being done with part 1, a labor of a few months of love. Mao-Lin had left for a work trip and I was cruising to finish this chunk by my self imposed July 22nd deadline. The deadline, even when extremely fake, helps focus the mind and ensures you don't spend forever in a rabbit hole that's besides the point.

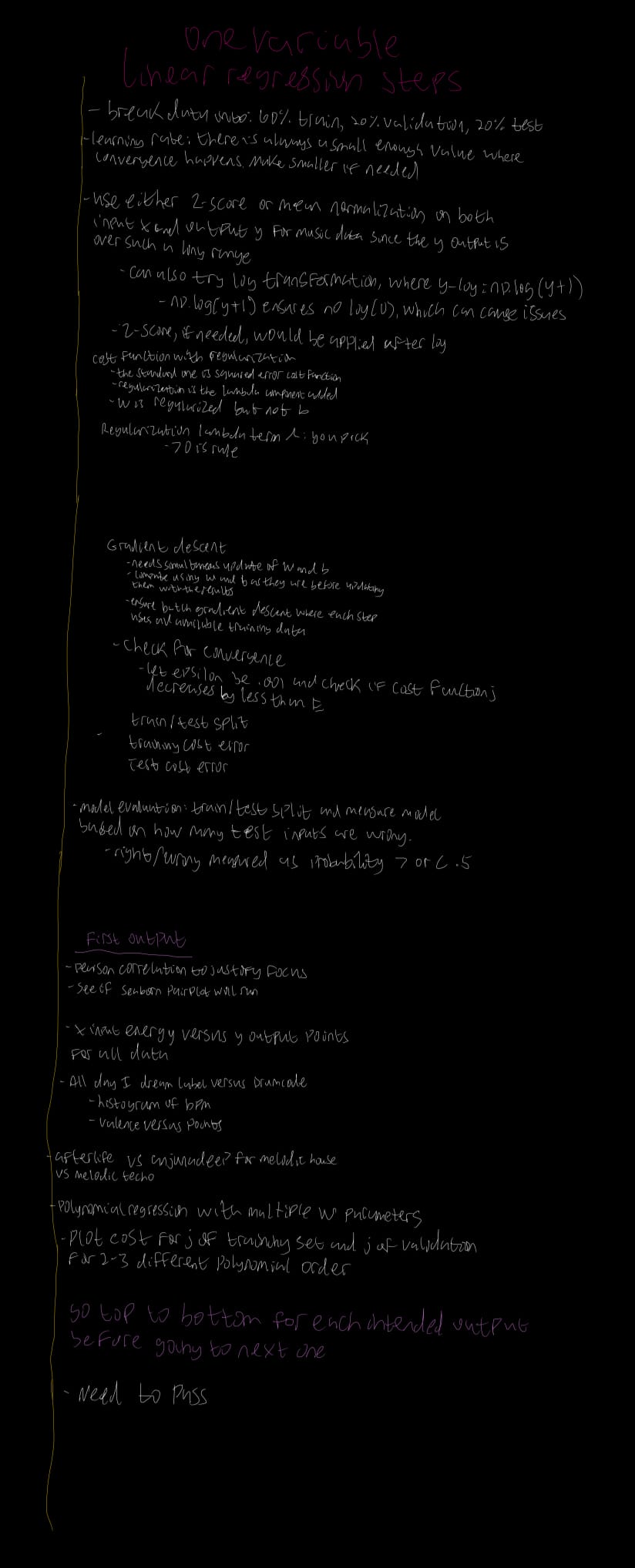

I was doing a final pass of a list I made for what I wanted to touch on in part 1. Train/validate/split? Check. Log transformation? Check. Gradient descent? Check. Polynomial regression? Can be punted to part two. I doubled back, feeling like something was missing but I had no idea what. The learning rate was there for sure, otherwise gradient descent wouldn't have worked. Cost function with regularization? Fuck, did I include regularization? No, I didn't. Damn it. Some things can be thrown overboard but this was something I wanted to be in the habit of early and often. I was going to have to change a bunch of code.

I spent the next hour going piece by piece, adding regularization and making sure each function properly handled it based on what the one before it was doing. Errors cascaded throughout my code, often related to some improper manipulation of an array. That error I mentioned above? It's because those two numbers from two different arrays need to be the same and mine were....very different. Every time an error fired, and I would fix it. This function needs a new way to handle w, that function has lambda as an input parameter and the return statement but not in the actual function definiton. You gotta make sure each component, from cost all the way down to linear fit, is using the right regularized outputs and that a random non-regularized component isn't stuck somewhere in the middle. MTV true life: when you're new to this, there will be something somewhere in the middle that shouldn't be there.

Part 1 is a work of love, the product of several months of work. I spent the vast majority of time, none of which is in the public notebook, simply getting the data organized and ready. Missing values need to be accounted for, different CSVs need to be smashed together and then taken apart and put together with other files in different ways. Due to all those bugs you write, you really get to know your data. DataFrame joins will go sideways because you picked the wrong join ID or didn't handle missing values properly. This metric can't be handled in that way and that categorical field is full of manually typed values that are all over the place. You will scream, more than once, very loudly. But you'll be proud in the end when you get the data to where it needs to be for encoding.

And then encoding is where you will lose your mind again, several times and in multiple directions at once. Encoding is taking your human readable data and transforming it into something machines can handle. All those text values? Those become numbers, whether you like it or not. You need to be very careful at this step, which I learned the hard way several times by being decidedly not careful, borderline belligerent. You need to make sure different types of actual numbers you have that you want to keep the same way (like all my ID fields) don't become scrambled gibberish. If you do nothing, and let numpy work its will, all sorts of fun stuff happens. All those text fields with text values? They can become a bunch of columns with binary values, exploding your data size.

You can intervene, though! You, yes you, have free will and can set the rules for what happens with what data, ensuring it comes through the other side. You can create rules that make sense to you, if you want. You might want certain types of text to become certain types of numbers. I mean, you always want the smallest dataset possible but, when you're new and getting oriented, doing encoding that makes sense to you is perfectly fine. Ignore the grumps online, you're just trying to learn and making it easier for you to follow is important.

At its core, part 1 is a music lover diving into music data, which was honestly a fun way to learn all of this. When you work with data you know, or at least makes sense to you, you're in a stronger position to know if what the model spits out makes any sense. You might not know if you've set your learning rate too high or if you need more iterations on the gradient descent function but you will be able to smell something off it it says your techno data is low energy. No way that All Day I Dream song has that much sadness, I call BS.

The hardest part about all of this is not knowing what's going to happen when you start, especially the very first time doing it. I'm sure, as you get more experience, you'll know much earlier if something is about to go off the rails. As a newb like me? You'll find out at 11:45pm the night before you hope to finish. You learn to keep an open mind and accept that an outcome you don't like doesn't mean the work you did is wasted. The history of tech is littered with stories of cascading failures eventually resulting in success. Also cascading failures that fail in new and innovative ways. Don’t believe me? Look at the history of Google trying to make messaging apps.

While I'm new to machine learning, I'm not new to data. I've been working with data my whole career and there's an important truth: what you think you know and what you actually know are often two different things. Optical illusions abound; you get lost in assumptions and hedges, slowly losing the plot. The pursuit of truth eludes you; is it because you don't want to know it or because you don't know how to find it? People are scared by data because a significant number of people make a lot of money off of you being scared by it.

Certainly, the underlying technical implementations can become complex but, at the end of the day, linear regression is just seeing if two values have a relationship and checking if the relationship means something. Very rarely in my career have I seen instances where something can’t be explained to someone, at least conceptually.

Is there a relationship between any of this data I have? Probably? I hope so? Some combination of model selection, feature selection, and optimized parameters will most likely lead to some outcome that ties different pieces together in either surprising or unsurprising ways. Do I know what that is, right now, close to midnight on July 22nd? Nope. This early on in a project, even when you've produced a ton of outputs, is still exploratory. If your goal is to build a complex model that involves neural networks and forward and backward propagation and waves hand wildly in the direction of deep learning terminology, why would you get nervous about the results this early on? It should influence next steps, for sure. It shouldn't derail you. I've been taking Andrew Ng's Machine Learning Specialization and I've heard him say, at least a few times, that the first time he builds a new model, it doesn't work.

This applies to data projects in general, not just machine learning. You continue chasing different threads, pursuing them until they've been reasonably exhausted. If something new comes along, you change course and see where it goes. Along the way, your enrich your data or throw something overboard. But, no matter what, you keep going. Don't be delusional but don't be deterred. Going back to the start counts as part of keep going. You’re allowed to have bad ideas in data and you’re allowed to spend a lot of time on something that you later determine is a bad idea. As long as you’re not running up against legal, privacy, or data governance constraints, you’re allowed to question assumptions.

Just don't forget your regularization term, though.

Want to know what I'm up to? HMU at hello@uwsthoughts.com.