Project Dolly Shield, Chapter 1, Part 2: The Road to Tokenization is Long and Involves Better Infrastructure

Overview

Dolly Shield Part Two involved a lot of reconfiguring the infrastructure based on the lessons learned in Part One. I've been slowly mastering the basics of deploying an app on Modal, a serverless cloud infrastructure provider. My datasets? Now stored in a private repository on the Hugging Face datasets hub, allowing me to use the Hugging Face dataset mapping and transformers tools more effectively. Number of new errors encountered? Priceless.

In the time between project posts, a new kid rolled onto the block: DeepSeek. As a data professional, I share the general concern about data privacy controls for PRC-based entities, and I certainly wouldn't just use their consumer chatbot. However, they've also released open source models and I want to see what's up. This means Project Dolly Shield now has two open source models being used: a Meta Llama model and a DeepSeek model.

I've also been reading "Hands-On Large Language Models" by Jay Alammar and Maarten Grootendorst, a very good book released in the fall of 2024 that walks you through different ways to work with large language models and includes plenty of examples.

Modal Model Download

The reason I chose Modal over competitors like RunPod or Lambda Labs is that Modal lets you deploy an app with all your preferred configurations and doesn't charge you for just setting things up and letting them sit idle. In addition, they have their own cloud storage service, allowing models to be downloaded and stored to their buckets. This means I can download a model and park it nearby, turning off the GPUs when I'm done. Modal also has purpose-built connectors to web UI tools like Vercel, which I intend to use when I get closer to a user-facing deployment.

Global Parameters

If there's one thing I've learned from engineering standups and other sources of best practices, it's that global parameters go in CAPS LOCK. Why? It makes them easier to follow through the code and tells the reader they don't self-destruct wherever they're seen.

A few notes about the below:

- Modal supports secret storage and use.

- modal.gpu.A100 is how you define and configure A100 GPUs.

- Is 8 80GB A100s excessive? Eh, depends. I'm working with datasets now that can reach close to 100GB.

- modal.Volume is how you set up a permanent storage environment that can be invoked across multiple scripts. You pay for this storage like any other cloud storage.

HF_TOKEN_SECRET = modal.Secret.from_name("HF_TOKEN")
GPU_CONFIG = modal.gpu.A100(size="80GB", count=8)
LLAMA_VOLUME = modal.Volume.from_name("llama-storage", create_if_missing=True)
MODEL_DIR = "/vol/models"
HOURS = 60 * 60
LLAMA_3211B = "meta-llama/Llama-3.2-11B-Vision-Instruct"
SONG_END_TOKEN = '<song_end>'

Image Setup

The image starts from a preconfigured one put together by Nvidia and made available for general use. Some people will have a need and a desire to create their own image completely from scratch; most will be just fine with one someone else has made. I then held my finger in the air and loaded in some libraries I thought I was going to need. The image needs the coding language (Python) and all the libraries preconfigured. This saves you from what I can only assume are tedious invocations later on.

The image for my DeepSeek model is exactly the same. Technically, I could probably use the same image for both, but I'm finding it easier to manage and tinker if I treat them as entirely separate builds.

LLAMA_IMAGE = (
    modal.Image.from_registry(
        "nvidia/cuda:12.4.0-devel-ubuntu22.04", add_python="3.11")
    .pip_install([
        "pandas",
        "numpy",
        "DeepSpeed",
        "matplotlib",
        "huggingface_hub",
        "datasets",
        "transformers",
        "torch",
        "accelerate",
        "sentence_transformers",
        "bitsandbytes",
        "nltk",
        "wandb",
        "seaborn",
        "faiss-gpu-cu12[fix_cuda]",
        "tqdm",
        "pyyaml",
    ])
)

Downloading and Saving the Model

This snippet is from a larger download_model function that pulls the model from Hugging Face and saves all of its files to Modal storage. This means I can later easily change the source code, a key feature of open source models. With proprietary models (like all those from OpenAI), you feed your data into an API and pay per token (roughly per word) for text generation. You pay per token for the model to read what you send it and then pay again per token for the model's response. You can very easily light a lot of money on fire for no good reason if you're not careful.

With open source, you cut out all those API payments but have to pay for your own GPUs and other infrastructure. You can also futz around with the source code, allowing for a lot more interesting customization.

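from transformers import AutoModelForCausalLM, AutoTokenizer

# Pull the tokenizer and weights from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained(model_name, token=hf_token)
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", token=hf_token)

# Register the custom <song_end> token once, then grow the embedding
# matrix so the new token ID has a row to point at.
if SONG_END_TOKEN not in tokenizer.get_vocab():
    tokenizer.add_tokens([SONG_END_TOKEN])
    model.resize_token_embeddings(len(tokenizer))

tokenizer.save_pretrained(f"{MODEL_DIR}/tokenizer_meta-llama")
model.save_pretrained(MODEL_DIR + "/" + "AutoModelForCausalLM_" + model_name)

# Persist the files written above to the Modal volume.
try:
    LLAMA_VOLUME.commit()
    print("llama storage commit all set!", flush=True)
except Exception as e:
    print(f"commit failed {e}", flush=True)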
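To put rough numbers on the per-token billing described above, here is a minimal back-of-the-envelope sketch. The function name `estimate_api_cost` and both prices are hypothetical, picked only to keep the arithmetic easy to follow; they are not any provider's actual rates.

```python
def estimate_api_cost(input_tokens: int, output_tokens: int,
                      usd_per_million_input: float,
                      usd_per_million_output: float) -> float:
    """Return the estimated USD cost of one API call billed per token."""
    # You pay once for what the model reads...
    input_cost = input_tokens / 1_000_000 * usd_per_million_input
    # ...and again for what it writes back.
    output_cost = output_tokens / 1_000_000 * usd_per_million_output
    return input_cost + output_cost


# Hypothetical prices (assumptions, not real rates).
PRICE_IN = 2.00   # USD per million input tokens
PRICE_OUT = 8.00  # USD per million output tokens

per_call = estimate_api_cost(1_500, 500, PRICE_IN, PRICE_OUT)
print(f"one call: ${per_call:.4f}")
print(f"100,000 calls: ${per_call * 100_000:,.2f}")
```

A fraction of a cent per call looks harmless, but multiplying by the number of rows in a large dataset is where the fire starts.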

Tokenizing Data

If you'll recall, Part One ended with a beautiful blaze of errors and other sub-optimal results related to tokenization.

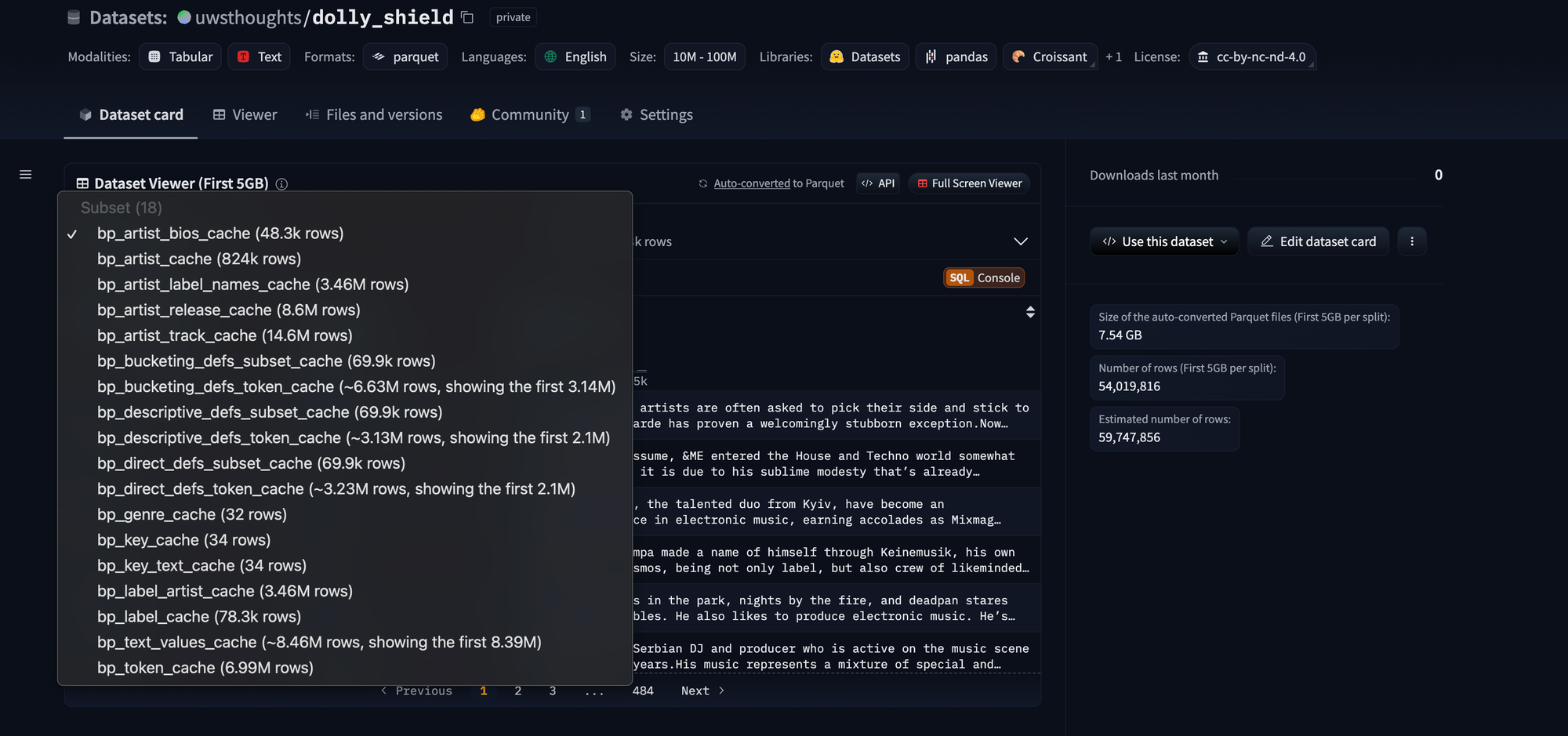

Dataset Configuration

This code:

DATASET_CONFIGS = [
    "bp_direct_defs_token_cache",
    "bp_direct_defs_subset_cache",
    "bp_descriptive_defs_token_cache",
    "bp_descriptive_defs_subset_cache",
    "bp_bucketing_defs_token_cache",
    "bp_bucketing_defs_subset_cache"
]

Is based on this setup using Datasets from Hugging Face, which are very neat and worth the hassle. All the files I had been flinging around and storing locally are now in a cloud service that has efficient connectors into the rest of the Hugging Face libraries.

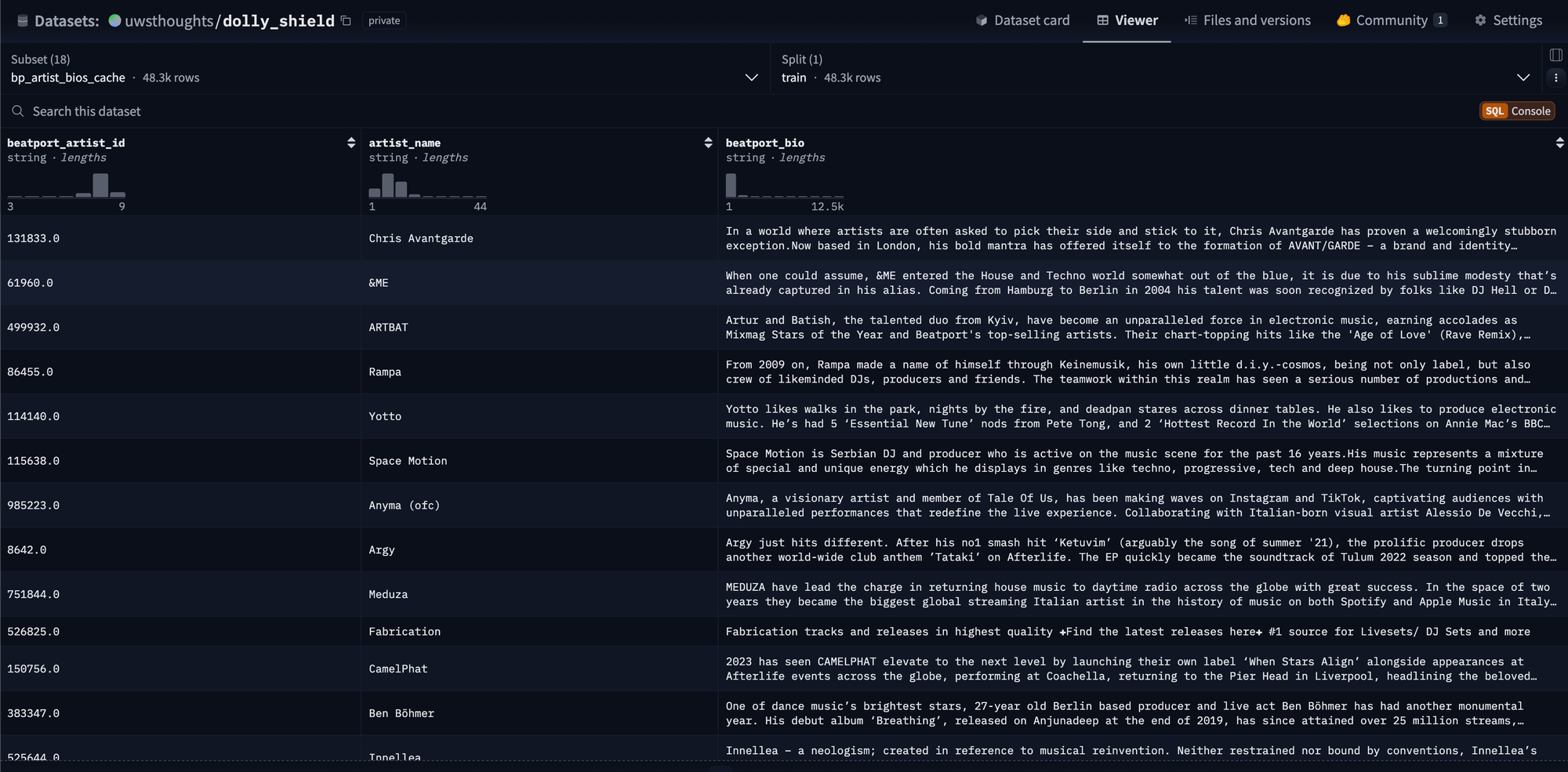

If you like, you can also view the data in tabular form from the UI.

Actual Tokenization

This snippet loads data from my uwsthoughts Dolly Shield dataset and defines train/test/validation splits using the built-in Hugging Face tool.

dataset = load_dataset("uwsthoughts/dolly_shield", name=hf_config, token=hf_token)
train_test_split = dataset["train"].train_test_split(test_size=0.2, seed=42)
test_valid_split = train_test_split["test"].train_test_split(test_size=0.5, seed=42)

data_splits = {
    "train": train_test_split["train"],
    "test": test_valid_split["test"],
    "validation": test_valid_split["train"]
}
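As a quick sanity check, the two-stage split above should work out to roughly 80/10/10. The helper below is a plain-Python sketch of that arithmetic, not part of the pipeline. `split_sizes` is a hypothetical name, and the rounding is an assumption, so the library's exact row counts may differ by a row on sizes that don't divide evenly.

```python
def split_sizes(n_rows: int, holdout: float = 0.2, test_share: float = 0.5) -> dict:
    """Approximate row counts for a two-stage train/test/validation split."""
    # Stage 1: carve the holdout portion (20% by default) off the full set.
    n_holdout = round(n_rows * holdout)
    n_train = n_rows - n_holdout
    # Stage 2: split the holdout between test and validation.
    n_test = round(n_holdout * test_share)
    n_validation = n_holdout - n_test
    return {"train": n_train, "test": n_test, "validation": n_validation}


sizes = split_sizes(1000)
print(sizes)
```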

This is how the datasets I pass in get converted to tokens and then mapped back to the words they started as. This mapping capability of Hugging Face is extremely popular because it gives you a clear way to track what is what as you progress through your model. Each large language model has its own tokenizer, so the same text may produce extremely similar or very different tokens depending on the model.

def token_mapper(example):
    # With batched=True, example[config_field] is a list of strings.
    tokenized_output = self.tokenizer(example[config_field], truncation=False, padding=True)
    return {
        "input_text": example[config_field],
        "token_text": [self.tokenizer.convert_ids_to_tokens(ids) for ids in tokenized_output["input_ids"]],
        "token_ids": tokenized_output["input_ids"],
    }

tokenized_splits = {}
for split_name, split_data in data_splits.items():
    tokenized_splits[split_name] = split_data.map(token_mapper, batched=True)

# A Hub repo ID has the form "namespace/name", so the config and split
# are passed as arguments instead of being appended to the repo path.
for split_name, tokenized_split in tokenized_splits.items():
    tokenized_split.push_to_hub(
        "uwsthoughts/dolly_shield",
        config_name=f"token_map_{hf_config}",
        split=split_name,
        token=hf_token,
    )
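To make the text-to-IDs-to-tokens round trip concrete without downloading a model, here is a toy stand-in. `ToyTokenizer` is entirely hypothetical: it splits on whitespace, whereas real LLM tokenizers use subword vocabularies and will split text differently. It only mimics two behaviours the mapper above relies on: padding each batch to its longest row (what `padding=True` asks for) and converting IDs back into readable tokens.

```python
class ToyTokenizer:
    """Whitespace tokenizer used only to illustrate the id <-> token mapping."""

    def __init__(self, texts, pad_token="<pad>"):
        # Build a tiny vocabulary from the texts; ID 0 is reserved for padding.
        words = sorted({w for t in texts for w in t.split()})
        self.id_to_token = [pad_token] + words
        self.token_to_id = {tok: i for i, tok in enumerate(self.id_to_token)}
        self.pad_id = 0

    def encode_batch(self, texts):
        """Encode texts and pad every row to the longest row in the batch."""
        rows = [[self.token_to_id[w] for w in t.split()] for t in texts]
        width = max(len(r) for r in rows)
        return [r + [self.pad_id] * (width - len(r)) for r in rows]

    def convert_ids_to_tokens(self, ids):
        """Map a list of IDs back to their token strings."""
        return [self.id_to_token[i] for i in ids]


batch = ["la la la", "sing a longer line here"]
tok = ToyTokenizer(batch)
token_ids = tok.encode_batch(batch)
token_text = [tok.convert_ids_to_tokens(ids) for ids in token_ids]
for ids, toks in zip(token_ids, token_text):
    print(ids, toks)
```

Note that the shorter row comes back with padding tokens on the end, so anything stored alongside it carries that padding too.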

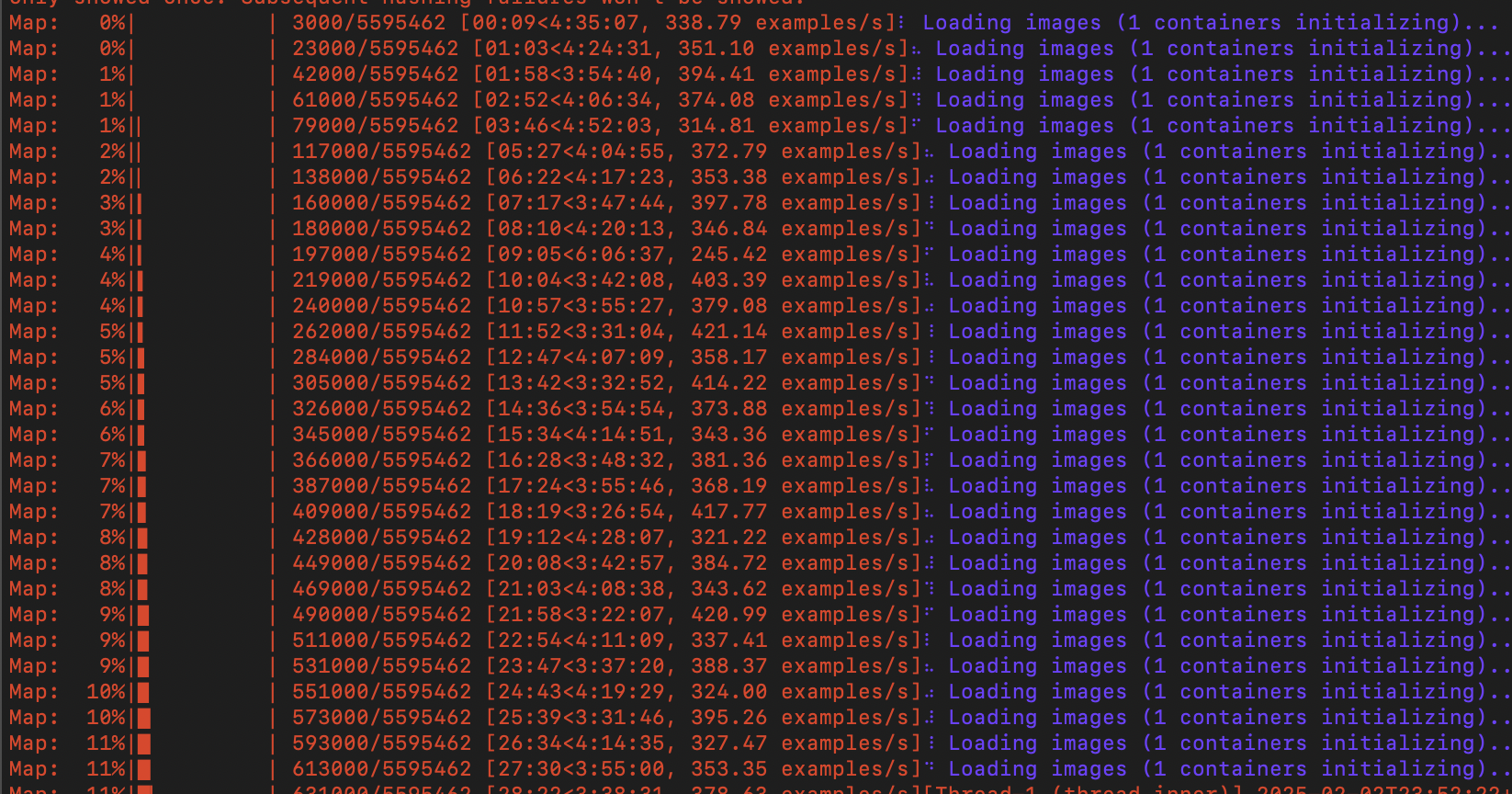

The logs in my terminal show a slow and steady mapping process underway. I don't believe my code currently uses parallel processing effectively, which I suspect is the source of the slowdown. I'll need to read through more documentation to determine where the issue lies.

Closing Thoughts

I'm very happy with the progress I've made thus far, even if optimal tokenization remains elusive. I'm more interested in how to effectively process large amounts of data than I am in user-facing outputs at the moment. I'm sure my current script could brute force a few megabytes of data but that's not really the point, you know? Enterprise tech works with petabytes of data. Being able to handle large amounts of data and ensuring the model can do so without wasting time or money is very important. Have I had to light some money on fire along the way to figure this out? Yes. Have I learned a lot of lessons? Yes.

This is another reason why, even if you're interested in eventually doing this at work, it's good to do some projects on your own with non-proprietary data. You can experiment freely and take on the risks yourself. You can learn freely and fail a whole lot more than you might be able to get away with at work. You're spending your own money, which will make you think about costs in a much more concrete way than just going yolo with the corporate budget.

All this is to say: keep going and don't give up. You'll eventually find someone who believes in you and it will all be worth it.